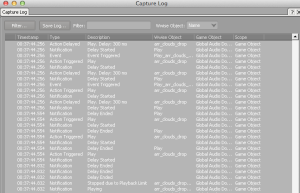

Blue sky out here this morning, so I’m having a Blue Sky day. *Update at foot of the article…! For the last couple of years I’ve been using Wwise to mix games. The run-time environment and routing are fast, intuitive and extremely flexible. Bus hierarchies and behaviours are some of the best control mechanisms I’ve found in any toolset for live mixing, and the suite of debug tools, like the profiler and the Voice Monitor, offers essential visualization of things like memory, voice resources and loudness. The Voice Monitor window in combination with the capture log is fantastic; however, with a few extra features, it could really change video game mixing workflows. When you start a capture while connected to a live game, the Voice Monitor visualizes all the triggered events in the form of a timeline, and it is this timeline visualization that is the key to further developing the window’s functionality…

The resulting timeline is a history of all the events being started or stopped: when they were triggered, how long they lasted, whether they faded in or out, and what their voice volume was at that specific time. It is essentially a timeline recording of the game sound, and as you play through a connected game, every event that the audio engine triggers is reported and shown in the window. Let’s imagine for a second that Audiokinetic were to extend this feature and allow users to ‘record’, save (and even edit) these as ‘capture sessions’ (a new folder in the ‘sessions’ tab could be home to these). Multiple users could then exchange these files and play them back in their Wwise project. A saved ‘capture session’ would become a kind of ‘piano roll’ style event recording of a game playthrough, almost like a MIDI file. Here is where the real benefits for mixing come in: different people inside (or outside) the developer could play through the game using play styles different from that of the audio implementer or mixer, and the mixer could then mix the game effectively for all these styles by playing back their performances inside Wwise and tweaking accordingly. This is a kind of ‘performance capture’ of the player.
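To make the ‘piano roll’ idea a little more concrete, here is a minimal sketch of what a saved capture session might contain. Everything in it is hypothetical: the `CapturedEvent` fields, the JSON layout and the helper names are assumptions made for illustration, not anything Wwise actually exposes.

```python
import json
from dataclasses import dataclass, asdict


@dataclass
class CapturedEvent:
    """One entry on the capture timeline (hypothetical layout)."""
    time_s: float     # seconds since the capture started
    name: str         # event name, e.g. "Play_Footstep"
    action: str       # "start" or "stop"
    volume_db: float  # voice volume reported at that moment


def session_to_json(events):
    """Serialize a capture session so it can be handed to another user."""
    return json.dumps([asdict(e) for e in events], indent=2)


def session_from_json(text):
    """Rebuild the event list from a previously saved session."""
    return [CapturedEvent(**d) for d in json.loads(text)]


def events_between(events, start_s, end_s):
    """Return the events inside a scrub window [start_s, end_s), in time order."""
    window = [e for e in events if start_s <= e.time_s < end_s]
    return sorted(window, key=lambda e: e.time_s)
```

A mixer could load a session recorded by someone else and pull out just the stretch they want to zoom in on, for example `events_between(session, 30.0, 45.0)`.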

Player Styles

I used to see the impact different play styles had on a mix quite often while working on open-world games such as Prototype, Scarface and Prototype 2. We’d spend a lot of time mixing the game with the audio team, slowly creeping around inside the game environment, making sure everything that needed a sound had a sound and that it was all balanced. We also made sure that we were following what we thought were all the correct mission objectives and getting to all the way-points as directed to complete the missions. The game started to sound pretty good and pretty polished. Enter our QA lead to drive the game for a mix review with the leads or for a demo of the game to journalists. This guy played totally differently to us, moved through levels completely differently, and had a completely unique, insane combat style. Every time he played, he’d miss out all the low-level subtle stuff we’d spent a lot of time on, and go for these big, flamboyant, flourish-filled ways of showing off the game. Jaws dropped. We could never play the game this way AND mix at the same time. This highlighted that mixing open-world games (or really *any* game) wasn’t going to be as straightforward as we’d first thought – sure, we *knew* there wasn’t just one path through the experience, but we didn’t know just how different that path could be. I’ve often wondered how we could best capture and replicate different play styles without having to constantly pester people to come in and play for us while we tune the mix.

Now, imagine we could spend a day getting 10 to 15 players with varying play styles to go through levels in the game while we recorded their playthroughs using an event-based capture session. We could later replay these event logs in real time, see and hear exactly what events were triggering, scrub back and forth, zoom in on details, zoom out again to consider the big picture of the mix, and re-engineer and re-mix the audio. It would enable us to mix faster, mix for more interactive, player-focussed outcomes, and not have that odd feeling in the gut that the next time we see our shipped game in a Twitch stream, it might sound kind of ‘not good’.

Video Sync

Another missing piece of this proposed capture system would be the ability to drop a video file of the same captured gameplay onto the Voice Monitor timeline and slide it around to sync up with the event log. Saving out these capture sessions would then also include a reference to a video file, so that whoever was tasked with mixing or pre-mixing the level or project at that time would have both the visual reference from the game and all the audio inside the Wwise project to work with and tweak. (Video capture from iOS is now easier thanks to the updates in the latest OS X, and capturing from a console or screen capturing on a computer is also fairly trivial these days. I don’t anticipate Wwise being able to capture video, but it would be great to be able to drop in and sync up a video on the Voice Monitor timeline.) This kind of capture-session playback mixing would also let developers hand over capture sessions to outside studios, which, with access to the relevant Wwise project, could hear the game in a calibrated environment, particularly useful if those facilities are not available at the development location. Allowing fresh sets of ears to assess a game’s mix is an essential aspect of game mixing, and using these forms of captured gameplay would be an extremely cool way to achieve this.
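Sliding a video around until it lines up with the event log boils down to storing a single offset alongside the session. The sketch below shows that arithmetic only; the function names are made up for illustration, and it assumes the video and the capture run at the same speed with no drift.

```python
def offset_from_sync_point(event_time_s, video_time_s):
    """Derive the video's offset from one moment identified in both places.

    For example, an explosion logged at 12.5 s on the capture timeline
    that is seen 10.0 s into the video gives an offset of 2.5 s.
    """
    return event_time_s - video_time_s


def video_time_for_event(event_time_s, video_offset_s):
    """Map a capture-timeline time to a position in the video file.

    Returns None when the event happened before the video starts.
    """
    t = event_time_s - video_offset_s
    return t if t >= 0 else None
```

Saving that one offset with the capture session would be enough for anyone opening it later to scrub the event log and the picture together.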

There are also obvious benefits for extremely large teams who need to co-ordinate a final mix across multiple studios, which could perhaps be achieved more easily by passing around these Wwise event capture sessions. There are certainly proprietary solutions that already take this recording and playback approach, and not just for audio but for all debugging; alas, these only benefit those who have access inside their organizations. Similar reporting and visualization exists in other third-party engines too, and as these systems are already mostly developed along the right lines, with some extra functionality they could accomplish some of what I am thinking about here.

So, I don’t think it will be long before these kinds of log recording, playback and editing features become a reality within interactive mixing and game development.

* Unknown to me, literally while I was writing this blog post, this kind of feature apparently became available over in FMOD Studio. From Brett… “This is possible with FMOD Studio 1.06 (Released 10th April 2015). You can connect to the game, do a profile capture session, and save it. To begin with, the mixed output (sample accurate pcm dump) is available, and you can scrub back and forth with it while looking at all events and their parameter values. The next bit is the bit you’re after though. You can click the ‘api’ button in the profiler which plays back the command list instead of the pcm dump, so you get a command playback (midi style). If you want, you can take that to another machine that doesn’t have the game, and change the foot steps for a character to duck quacks if you want. You can do anything with it.”

* Added support for capturing API command data via the profiler, when connected to game/sandbox
* Added support for playback of API captures within the profiler, allowing you to re-audition the session against the currently built banks
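The difference between a PCM dump and a command playback is easy to illustrate: the captured commands stay the same, and only the content they resolve to changes. The following is a toy sketch of that idea, not FMOD’s API; the `replay` function, the command tuples and the dictionaries standing in for banks are all invented for the example.

```python
def replay(commands, bank):
    """Resolve captured (time_s, event_name) commands against a bank.

    `bank` is a plain dict mapping event names to sound assets; it stands
    in for whatever content is currently built. Events missing from the
    bank are skipped. Returns the (time_s, asset) pairs in time order.
    """
    played = []
    for time_s, event_name in sorted(commands):
        asset = bank.get(event_name)
        if asset is not None:
            played.append((time_s, asset))
    return played


# The same captured session, auditioned against two different banks.
captured = [(0.0, "Music_Start"), (1.2, "Footstep"), (1.7, "Footstep")]
original_bank = {"Music_Start": "theme.wav", "Footstep": "boot_on_gravel.wav"}
edited_bank = {"Music_Start": "theme.wav", "Footstep": "duck_quack.wav"}
```

Calling `replay(captured, edited_bank)` gives the same timing as the original playthrough but with duck quacks in place of footsteps, which is the whole appeal of replaying commands rather than rendered audio.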

This sounds like a great idea/feature. It reminds me of something that friends of mine do when they’re mixing front-of-house for large live concert situations (like orchestra plus rock band or a musical theatre show) – they can easily record every single channel through the digital mixer then play it back through the same mixer channels later when everyone’s gone home, meaning they can make better mix/processing decisions without being under pressure. And rehearse complex sections. I guess potentially even hand it all to someone else to learn/practise with.